Simplismart

Enterprise AI infrastructure for scalable model deployment

Overview

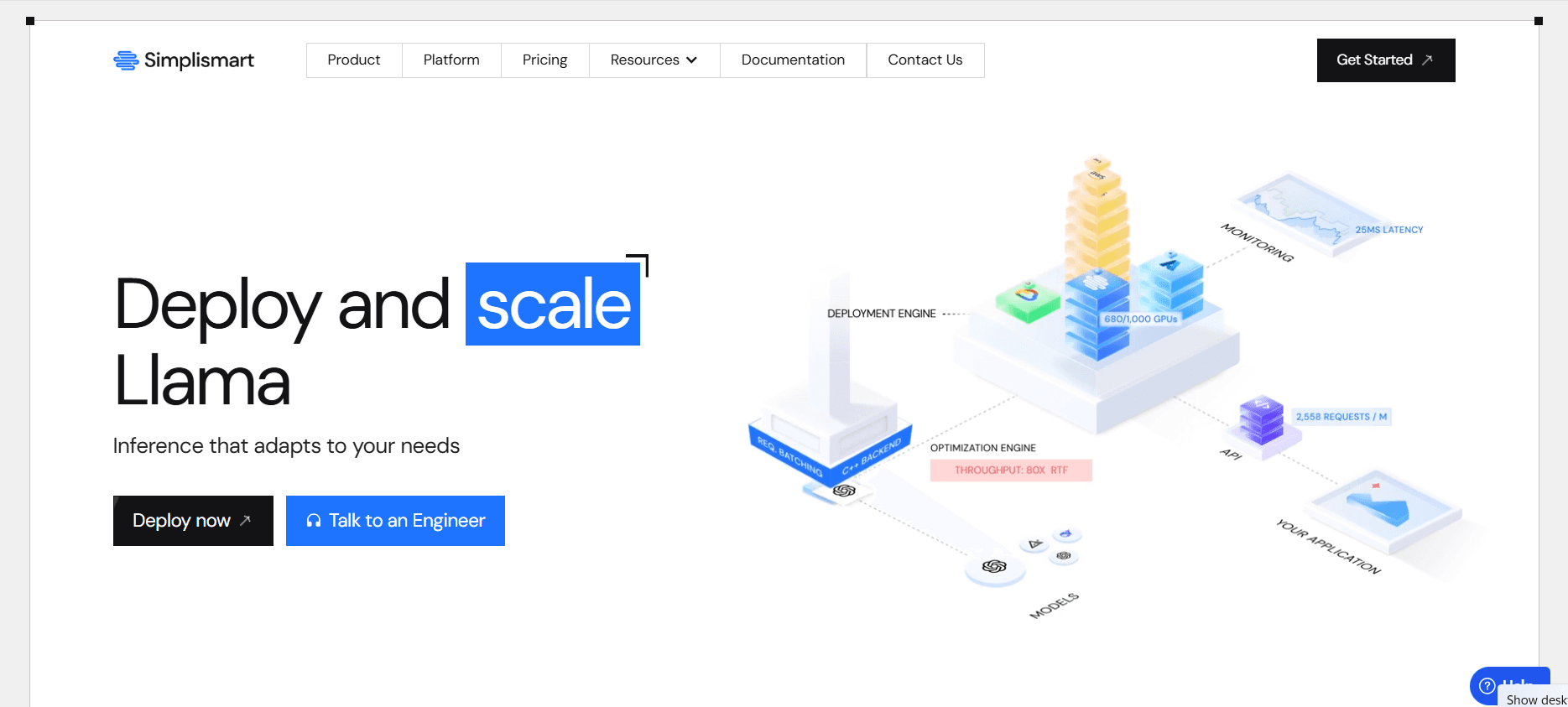

Simplismart delivers an end‑to‑end MLOps solution with a proprietary inference engine designed for low latency, high throughput, and cost‑efficient AI operations. Its infrastructure supports both pay‑as‑you‑go shared endpoints and dedicated environments, including private clouds and on‑premises deployments. Users can fine‑tune, benchmark, monitor, and deploy models of several types, including LLMs, speech, image, and multimodal systems. Enterprise‑grade autoscaling, observability tools, and security compliance make it suitable for production workloads.

Who It's For

AI/ML Engineers, Data Science Teams, Enterprise IT, Developers, Product Teams

Key Strengths

Primary Use Cases

Technical Specifications

Supported AI Models

Deployment Methods

Supported Languages

Security & Compliance

Company Information

Location

San Francisco, USA

Company Size

11-50 employees